The AI revolution – A self-optimizing bucket elevator for alternative fuels

The feeding of alternative fuels (AFs) is a challenging task due to their inherently volatile material properties. In particular, the vertical transport of AF particles in classical bucket elevators can lead to enormous throughput variations and associated conveying problems. This article presents an automatic optimization methodology for bucket elevators based on artificial intelligence and reinforcement learning.

1 Introduction

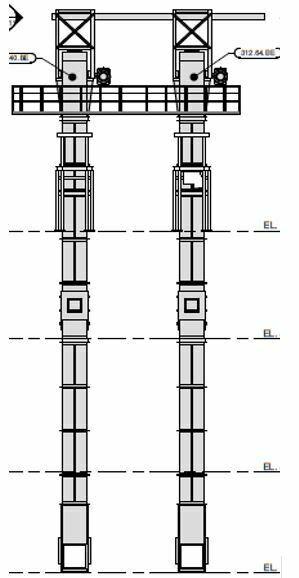

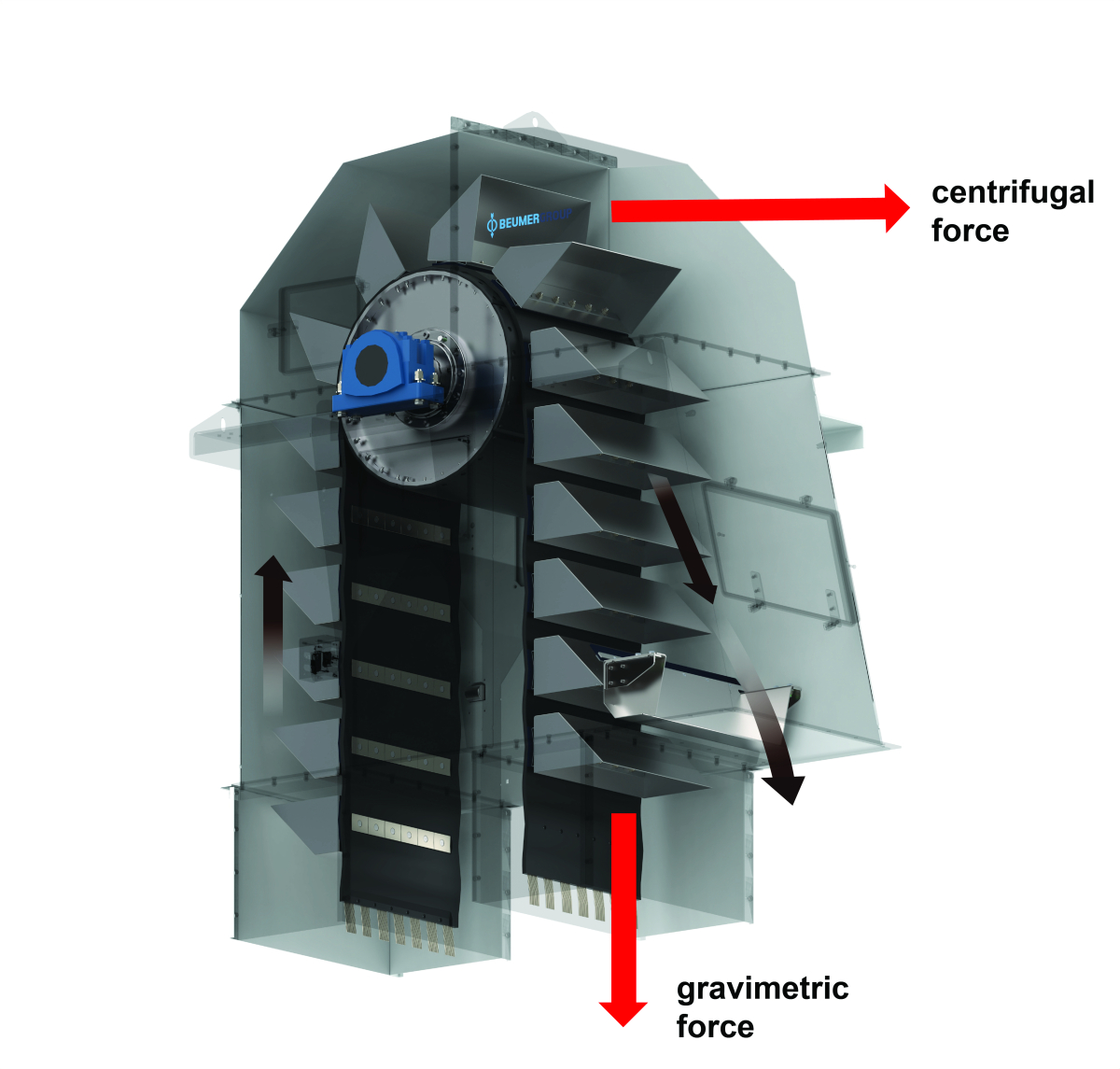

Bucket elevators play a crucial role in cement manufacturing by efficiently transporting raw materials, clinker, and finished products vertically, optimizing space and energy use. With the industry’s shift toward sustainability, bucket elevators are increasingly vital for handling alternative fuels (AF) such as biomass, waste-derived fuels, and shredded tires. These fuels, which are often irregular in size and consistency, require robust and reliable conveying systems to ensure a consistent feed into the kiln. Bucket elevators designed for such applications enhance fuel flexibility, help reduce carbon emissions, and support the cement industry’s transition to greener operations while maintaining operational efficiency. Figure 1 shows a high-capacity belt bucket elevator designed for AF applications and manufactured by the Di Matteo Group.

As indicated in [1], using bucket elevators for the vertical transport of coarse and irregular bulk materials can be challenging, because the specific characteristics of these fuels can lead to insufficient bucket filling at the inlet of a bucket elevator. Furthermore, the discharge behavior at the upper bucket elevator outlet can cause further inefficiencies. A previous study [2] showed that using standard bucket elevator designs for AF material streams leads to major inefficiencies and limits the achievable mass or volume flow. Accordingly, analyses of existing AF feeding installations frequently revealed that the installed bucket elevators are bottlenecks for a potential increase of AF usage within the clinkering process.

Artificial Intelligence (AI) can optimize bucket elevators in cement manufacturing by tailoring their performance to the specific characteristics of alternative fuels, such as varying size, density, and moisture content. AI algorithms can analyze real-time sensor data to dynamically adjust operational parameters like belt speed, bucket filling rates, and discharge patterns, ensuring efficient handling of diverse fuel types. By optimizing these processes, AI can reduce energy consumption and spillage and help ensure a consistent feed of alternative fuels into the kiln. Such intelligent optimization enhances the overall efficiency of bucket elevators, enabling smoother integration of alternative fuels into cement production and supporting the industry’s sustainability goals.

This article describes how AI can be used to optimize the throughput of a bucket elevator for alternative fuels under varying fuel characteristics. To this end, the discharge region of the machine was observed by a high-speed camera to measure the current conveying efficiency of the bucket elevator. A reinforcement learning approach then automatically finds the optimal process parameters for the bucket elevator. The overall methodology was developed using a digital twin of the real bucket elevator and was further refined using a miniature lab-scale bucket elevator. Finally, a real AF conveying setup was used to provide an overall proof of concept.

The remainder of this article is organized as follows: Section 2 introduces the general idea of reinforcement learning, its application to bucket elevators, and typical challenges of conveying AF. Section 3 describes the learning architecture and setup. Section 4 presents the results of a three-stage evaluation, ranging from a simulation model of the bucket elevator acting as a digital twin, via a miniature bucket elevator model, to a real-world pilot setup. Section 5 concludes the work.

2 Reinforcement Learning for throughput optimization

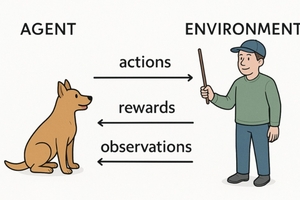

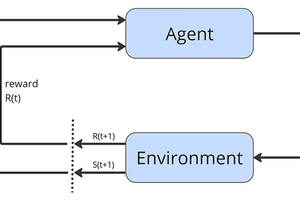

Reinforcement Learning (RL) is a type of machine learning in which an agent learns to make decisions by interacting with an environment so as to maximize a cumulative reward. The agent takes actions based on its current state, and the environment responds with a new state and a reward signal, which indicates the success or failure of the action (see [3]). Through trial and error, the agent develops a policy, i.e. a strategy for choosing actions, that maximizes long-term rewards. Unlike supervised learning, RL does not rely on labelled data; instead, it learns from feedback received through rewards or penalties. This makes RL particularly effective for dynamic and complex problems, such as game playing, robotics, and optimization tasks, where the optimal solution is not explicitly known but can be discovered through exploration and experience.

Imagine teaching a dog to fetch a ball, as illustrated in Figure 2(a). You throw the ball, and the dog runs after it. If the dog brings the ball back to you, you give it a treat (a reward). If the dog does not bring the ball back, it gets nothing. Over time, the dog learns that bringing the ball back leads to a treat, so it does this more often. Reinforcement learning works in a similar way. Figure 2(b) shows the correspondence between the dog example and the general architecture of a reinforcement learning setup.
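The trial-and-error idea of the dog example can be illustrated with the simplest RL setting, a two-armed bandit with ε-greedy exploration. This is a toy sketch only: unlike the full RL setup, it has no states or state transitions, and the action names and reward values are invented for illustration.

```python
import random


def learn_fetch(episodes: int = 500, epsilon: float = 0.1, seed: int = 0) -> dict[str, float]:
    """Toy trial-and-error learner for the dog analogy (a two-armed bandit)."""
    rng = random.Random(seed)
    # Hypothetical actions; "keep_ball" is listed first so the learner starts with the wrong habit
    actions = ["keep_ball", "bring_back"]
    value = {a: 0.0 for a in actions}  # running estimate of the average reward per action
    count = {a: 0 for a in actions}    # how often each action has been tried
    for _ in range(episodes):
        # Explore with probability epsilon, otherwise exploit the best estimate so far
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=value.get)
        reward = 1.0 if action == "bring_back" else 0.0  # a treat only for bringing the ball back
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # incremental mean update
    return value


values = learn_fetch()
best = max(values, key=values.get)  # the behaviour the "dog" has learned to prefer
```

Only occasional exploration lets the learner discover that bringing the ball back is rewarded; after that, exploitation makes it the preferred action.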

The question is how this general idea can be used to optimise the throughput of a bucket elevator for alternative fuels. To answer it, it must first be clarified which entity acts as the agent in such a scenario. In the bucket elevator optimisation scenario, the bucket elevator itself is regarded as the environment, while a control system acts as the agent. It is assumed that the control system can manipulate specific process parameters and variables of the bucket elevator, e.g. the bucket speed. These manipulations are the agent's actions on the environment. They lead to a certain behaviour of the bucket elevator, e.g. in terms of throughput or energy efficiency, which defines the resulting states that are then rewarded or penalised.
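The mapping of agent and environment can be sketched as an interaction loop. This is a minimal sketch under explicit assumptions: `ToyBucketElevator` is a hypothetical stand-in whose throughput curve is invented (it is not measured data), and the decision rule is a simple hill climber used as a placeholder, not the learning agent described in Section 3. Only the speed limits of 0.5 m/s and 2.0 m/s are taken from the article.

```python
from dataclasses import dataclass


@dataclass
class ToyBucketElevator:
    """Hypothetical environment: the state is the bucket speed in m/s."""
    speed: float = 1.8   # initial speed, m/s
    v_min: float = 0.5   # lower speed limit, m/s
    v_max: float = 2.0   # upper speed limit, m/s

    def throughput(self) -> float:
        # Invented throughput curve with its optimum at 1.0 m/s (illustration only)
        return max(0.0, 1.0 - (self.speed - 1.0) ** 2)

    def step(self, delta_v: float) -> tuple[float, float]:
        """Apply an action (a speed change), then return (new state, reward)."""
        self.speed = min(self.v_max, max(self.v_min, self.speed + delta_v))
        return self.speed, self.throughput()


def run_episode(env: ToyBucketElevator, steps: int = 20) -> float:
    """Placeholder agent: a hill climber, not a learning agent."""
    delta = -0.1                      # initial speed change per step, m/s
    _, last_reward = env.step(0.0)    # observe the reward at the initial speed
    for _ in range(steps):
        _, reward = env.step(delta)   # act on the environment, receive new state and reward
        if reward < last_reward:
            delta = -delta / 2        # reward dropped: reverse direction and halve the step
        last_reward = reward
    return env.speed


final_speed = run_episode(ToyBucketElevator())
```

In a real installation, `step` would command the drive and read the reward from the measurement system instead of evaluating a formula.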

Regarding the exact setup of the reinforcement learning architecture, it is important to define a clear optimisation aim. In this paper, the main goal is to optimise the throughput of the bucket elevator, i.e. to maximise the material stream, e.g. in terms of the volume flow V̇ [m³/h]. Notably, other optimisation aims, such as minimising the energy demand of the machine or reducing wear and tear, can also be realised and would only require adapting the definition of the rewards and/or the necessary actions and states.
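How an adapted reward definition could look is sketched below. The normalisation with a reference volume flow `v_ref` and a reference power `p_ref`, as well as the weight `w_energy`, are hypothetical; with `w_energy = 0` the function reduces to the pure throughput objective pursued in this paper.

```python
def reward(volume_flow: float, power: float, v_ref: float, p_ref: float,
           w_energy: float = 0.0) -> float:
    """Hypothetical reward: normalised throughput minus an optional energy penalty."""
    # Throughput term: 1.0 means the reference volume flow (e.g. in m³/h) is reached
    throughput_term = volume_flow / v_ref
    # Optional energy term: w_energy = 0 gives pure throughput maximisation
    energy_term = w_energy * power / p_ref
    return throughput_term - energy_term


r_throughput_only = reward(30.0, 10.0, v_ref=30.0, p_ref=10.0)
r_with_energy = reward(30.0, 10.0, v_ref=30.0, p_ref=10.0, w_energy=0.5)
```

A wear-related aim could be added in the same way as a further penalty term, provided a suitable wear indicator can be measured.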

In order to have a sound understanding of the challenges when bucket elevators are used for alternative fuels or other bulk materials with varying characteristics, the following section provides a short overview of typical problematic scenarios.

2.1 Alternative fuels in bucket elevators

The usage of bucket elevators for alternative fuels can be challenging. As described in [4], the main technical aspects when alternative fuels are handled by bucket elevators are the following:

2.1.1 Material carryback and spillage

Bucket elevators rely on buckets to scoop and carry materials vertically. However, alternative fuels often have irregular shapes, low bulk densities, or sticky properties, which can lead to material spillage during transport. For example, lightweight biomass or shredded plastics may not remain securely in the buckets, causing them to fall out during ascent or descent. This spillage can accumulate in the elevator boot, leading to blockages and requiring frequent cleaning.

2.1.2 Bucket overfilling or underfilling

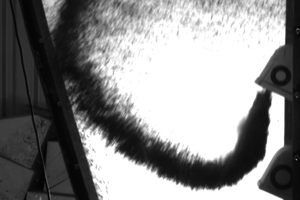

The inconsistent bulk densities and flow properties of alternative fuels can lead to uneven filling of buckets. It was shown in [5] that fluffy or lightweight materials, such as certain types of biomass, may underfill the buckets, reducing the elevator’s throughput. Conversely, denser materials, like shredded tires, may overfill the buckets, causing spillage. This variability in filling can result in operational inefficiencies and increased wear on the elevator components. Figure 3 provides an example of the problematic filling process of buckets for a refuse-derived fuel (RDF) containing mainly plastic foils. This behaviour depends on the transport speed of the buckets vF [m/s] and on the specific characteristics of the material. As shown in Figure 3(a) and (c), slow speeds typically lead to overfilling and thus to spillage within the bucket elevator, while Figure 3(b) and (d) show typical underfilling at higher speeds. This is problematic because bucket elevators are typically operated at a fixed speed, so that varying bulk material properties strongly influence the conveying efficiency and overall operational safety.
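Why speed and filling interact can be made explicit with the standard capacity relation for bucket elevators. The symbols a (bucket spacing in m), V_B (bucket volume in m³) and φ (dimensionless fill ratio, which for AF depends on vF) are introduced here for illustration and are not taken from the article:

$$
\dot{V} = 3600 \cdot \frac{v_F}{a} \cdot V_B \cdot \varphi(v_F) \qquad [\mathrm{m^3/h}]
$$

A higher speed increases the number of buckets passing per second, but if the fill ratio drops with speed, the throughput only increases as long as the product of speed and fill ratio grows. An interior throughput maximum therefore satisfies

$$
\frac{\mathrm{d}}{\mathrm{d}v_F}\bigl(v_F\,\varphi(v_F)\bigr) = \varphi(v_F) + v_F\,\varphi'(v_F) = 0,
$$

i.e. the relative gain in bucket frequency is exactly offset by the relative loss in filling. Because φ(vF) changes with the material properties, this optimum shifts whenever the fuel changes, which is what a fixed-speed operation cannot follow.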

2.1.3 Bridging and blockages in the boot section

The boot of a bucket elevator is where material is fed into the buckets. Alternative fuels with high moisture content, fibrous textures, or sticky properties — such as certain types of waste or biomass — can cause bridging or clogging in this area. These blockages can halt the entire elevator, requiring downtime for manual cleaning and disrupting the overall feeding of alternative fuels to the burning process.

2.1.4 Increased wear on buckets, belts/chains and casing

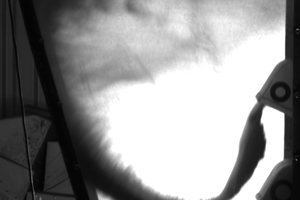

Alternative fuels often contain abrasive or hard contaminants, such as metals, stones, or glass, which can accelerate wear on the buckets, belts, or chains of the elevator. For example, shredded tires may include steel wires that abrade the buckets, while municipal solid waste may contain abrasive particles. This wear reduces the lifespan of elevator components and can lead to frequent replacements and increased maintenance costs. Furthermore, the discharge of AFs from the buckets can show an undesired behaviour that causes constant wear on the bucket elevator casing. Examples of this behaviour can be found in Figure 4.

In conclusion, non-optimised bucket elevators can be problematic for AF, especially since the problems described above may lead to a volatile mass flow and a substantial reduction in throughput, e.g. due to non-optimal bucket filling and/or unfavourable discharge trajectories.

3 Learning architecture and setup

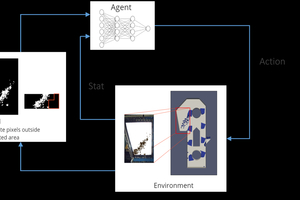

For the reinforcement learning setup, the bucket elevator itself acts as the observed environment. The so-called state space of the system describes the actual state of the environment at a given time. In the current work, these states are discrete numerical values. Based on these values, the agent computes a probability for every possible action, selects the action with the highest probability, and estimates a value describing how good the chosen action is. These numerical calculations are performed by a Deep Neural Network (DNN), i.e. an artificial neural network with more than one hidden layer, hence the term “deep”. To provide feedback to the agent about the current throughput of the bucket elevator, a visual measurement system was developed that provides a pixel-based, image-based volume flow estimation as a reward (see [6] for more details). The overall setup is visualised in Figure 5. To speed up training, the RL approach was first trained on a Discrete Element Method (DEM) simulation. This substantially reduces the overall training burden, since the real system then only requires transfer learning from the pre-trained model.
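A pixel-based reward of this kind could be sketched as follows. The actual measurement method is described in [6]; here it is assumed, hypothetically, that the camera image has already been segmented into a boolean material mask and that two regions of interest are defined: a desired outlet window and an unwanted region of the discharge parabola.

```python
import numpy as np


def pixel_reward(material: np.ndarray, outlet_roi: np.ndarray,
                 unwanted_roi: np.ndarray) -> float:
    """Hypothetical image-based reward from boolean masks of equal shape.

    material     -- True where a pixel shows conveyed material (e.g. after thresholding)
    outlet_roi   -- True inside the desired discharge window towards the outlet
    unwanted_roi -- True inside the region the material should avoid
    """
    total = np.count_nonzero(material)
    if total == 0:
        return 0.0  # no material visible in this frame
    good = np.count_nonzero(material & outlet_roi)    # material pixels heading to the outlet
    bad = np.count_nonzero(material & unwanted_roi)   # material pixels in the unwanted region
    # For non-overlapping regions the result lies in [-1, 1]
    return float((good - bad) / total)
```

Pixel counts are only a proxy for volume; a calibration against measured volume flows would be needed to interpret the reward in m³/h.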

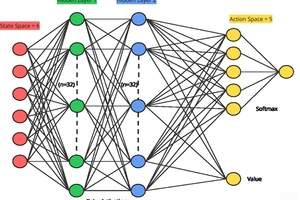

The architecture of the DNN-based agent can be described as follows: the number of input neurons of the network is determined by the dimension of the state space, which is six. The number of output neurons is determined by the size of the action space, in this case five. The network consists of two hidden layers with thirty-two neurons each. Between these two hidden layers, a rectified linear unit (ReLU) activation function is used. It sets all negative values produced by the first hidden layer to zero and thus introduces non-linearity into the network. Finally, a softmax function is applied at the output of the network, i.e. the action space, to generate a probability for each action. The overall setup is shown in Figure 6.
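The forward pass of the described network can be sketched in NumPy. The layer sizes and the placement of ReLU and softmax follow the text; the weight initialisation and the encoding of the state into six numbers (speed, material id, infeed rate and three PSD fractions) are assumptions. Only the policy output is shown; the value estimate mentioned above would require an additional output that is omitted here, and the weights are untrained.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def init_layer(n_in: int, n_out: int) -> tuple[np.ndarray, np.ndarray]:
    # He-style random initialisation (assumption; the article does not state it)
    weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    return weights, np.zeros(n_out)


# Layer sizes from the text: 6 state inputs -> 32 -> 32 -> 5 actions
W1, b1 = init_layer(6, 32)
W2, b2 = init_layer(32, 32)
W3, b3 = init_layer(32, 5)


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)      # negative values are set to zero


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())        # subtract the maximum for numerical stability
    return e / e.sum()


def policy(state: np.ndarray) -> np.ndarray:
    """Forward pass: one probability per action."""
    h1 = relu(state @ W1 + b1)     # hidden layer 1, followed by ReLU as described
    h2 = h1 @ W2 + b2              # hidden layer 2 (no further activation specified)
    return softmax(h2 @ W3 + b3)   # output layer: probabilities over the 5 actions


# Hypothetical state encoding: speed, material id, infeed rate, three PSD fractions
state = np.array([1.8, 1.0, 0.5, 0.21, 0.17, 0.62])
probs = policy(state)
action = int(np.argmax(probs))     # greedy choice of the most probable action
```

In practice, a deep learning framework would be used so that the weights can be trained and later transferred from the DEM-based model to the real system.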

In the optimization of the bucket elevator process, the state of the system was defined by four important parameters, namely the speed of the elevator, the type of material used in the simulation (e.g. RDF or wood chips), the infeed rate of material, and the typical particle size distribution (PSD) of a particular infeed material. The optimization was carried out by manipulating the bucket elevator velocity, whose operating range was set between 0.5 m/s and 2.0 m/s. To find an optimum bucket velocity for a given system state, this operating range was discretised: fixed velocity increments were defined and assigned to individual actions. In total, five actions were available to the agent: four velocity changes of different magnitude and direction, and one additional action that keeps the current velocity.
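The discrete action space can be sketched as a lookup table of velocity increments. The operating range and the structure of four signed steps plus one "hold" action follow the text; the step magnitudes of 0.1 m/s and 0.25 m/s are hypothetical, since the article does not state them.

```python
V_MIN, V_MAX = 0.5, 2.0  # operating range from the text, m/s

# Hypothetical increments in m/s: two sizes in each direction, plus "hold" (0.0)
ACTIONS = (-0.25, -0.1, 0.0, +0.1, +0.25)


def apply_action(speed: float, action_index: int) -> float:
    """Map a discrete action index (0..4) to a new bucket speed within the operating range."""
    if not 0 <= action_index < len(ACTIONS):
        raise ValueError(f"action index must be in 0..{len(ACTIONS) - 1}")
    new_speed = speed + ACTIONS[action_index]
    # Clip to the operating range; rounding removes floating-point noise from the increments
    return round(min(V_MAX, max(V_MIN, new_speed)), 3)
```

Combining coarse and fine steps lets the agent approach the optimum quickly and then refine it, while the "hold" action allows it to remain at a good operating point.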

4 Results

The overall approach was tested in a three-stage process: in stage 1, the approach was tested purely on DEM simulations to allow full control of all parameters. In stage 2, the process was tested on a miniature bucket elevator model. Finally, in stage 3, an evaluation was carried out in a pilot plant at the Di Matteo Group. For all tests, mainly classical RDF and wood chips were used as prominent examples of AFs.

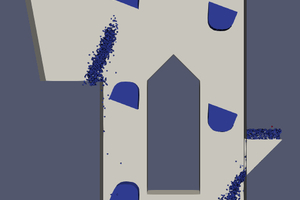

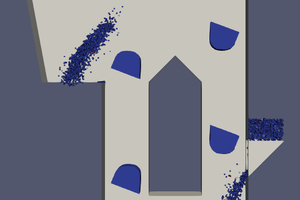

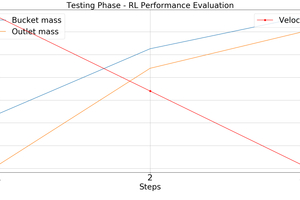

To visualise how the reinforcement learning approach performs, Figure 7 shows an example obtained by DEM simulation: the situation at the beginning of the operation (Figure 7(a)), during the optimisation (Figure 7(b)) and at the end in an optimised state (Figure 7(c)). The associated changes in speed and throughput are plotted in Figure 7(d).

The initial state of the system in this scenario consisted of an elevator velocity of 1.8 m/s, a constant material infeed rate of 0.5 m/s, and a PSD of the infeed material consisting of spheres with radii of 3 cm (21%), 1.5 cm (17%) and 0.5 cm (62%). The simulation snapshots show that at an elevator speed of 1.55 m/s, most of the material within the discharge parabola fell into the unwanted region of the reward window. This led to a large negative reward. As the agent progressively decreased the bucket elevator velocity in the wood chip case, more and more material was driven out of this unwanted red region and towards the outlet, which led to a better reward. The RL agent then stopped the optimization process once almost 95% of the material mass in a bucket, measured at the inlet, was discharged at the outlet section, which in this particular scenario occurred at a bucket elevator velocity of 1.05 m/s. In this scenario, the automatic optimisation drastically increased the overall throughput of the bucket elevator.
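The stopping criterion can be expressed as a discharge efficiency, i.e. the share of the bucket mass measured at the inlet that reaches the outlet section. The sketch below is hypothetical: the 0.95 default mirrors the "almost 95%" stated above, but the exact rule used in the study is not given.

```python
def discharge_efficiency(mass_in_bucket_kg: float, mass_at_outlet_kg: float) -> float:
    """Share of the bucket mass (measured at the inlet) that is discharged at the outlet."""
    if mass_in_bucket_kg <= 0.0:
        raise ValueError("bucket mass must be positive")
    return mass_at_outlet_kg / mass_in_bucket_kg


def should_stop(mass_in_bucket_kg: float, mass_at_outlet_kg: float,
                threshold: float = 0.95) -> bool:
    """Hypothetical termination rule: stop once the discharge efficiency reaches the threshold."""
    return discharge_efficiency(mass_in_bucket_kg, mass_at_outlet_kg) >= threshold
```

In a DEM simulation both masses are directly available; on a real machine they would have to be estimated, e.g. from the image-based measurement.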

5 Conclusion

As shown in this article, the performance of bucket elevators can be optimized automatically by using AI methods such as the proposed reinforcement learning approach. Together with the AF-optimized design of the bucket elevators from the Di Matteo Group, this can drastically improve the overall applicability of bucket elevators for bulk materials with volatile characteristics. Similar approaches are applicable to other equipment in AF processing and feeding lines to overcome typical operational challenges associated with AFs. The Laboratories for Industrial Measurement (iMe) and Engineering Mechanics of South Westphalia University of Applied Sciences in Soest are currently working on several projects that use AI and digital twins to optimize classical processes within the cement and minerals industry.

Funding Note

This research is partially funded by the German Federal Ministry for Economic Affairs and Energy under the Central Innovation Programme for Small and Medium-sized Enterprises (SMEs), project number KK5298302CM1. The authors would like to thank everyone involved for their valuable support.